Distributed Systems – Pros, Cons & Limitations

Distributed Systems for Beginners: Why You Can’t Just Use One Server

In the world of modern web applications and cloud services, have you ever wondered why platforms like YouTube, Uber, Spotify, or Facebook don’t just run everything on one super-powerful server? The answer lies in distributed systems, a fundamental design approach that powers the internet as we know it today.

What Is a Distributed System?

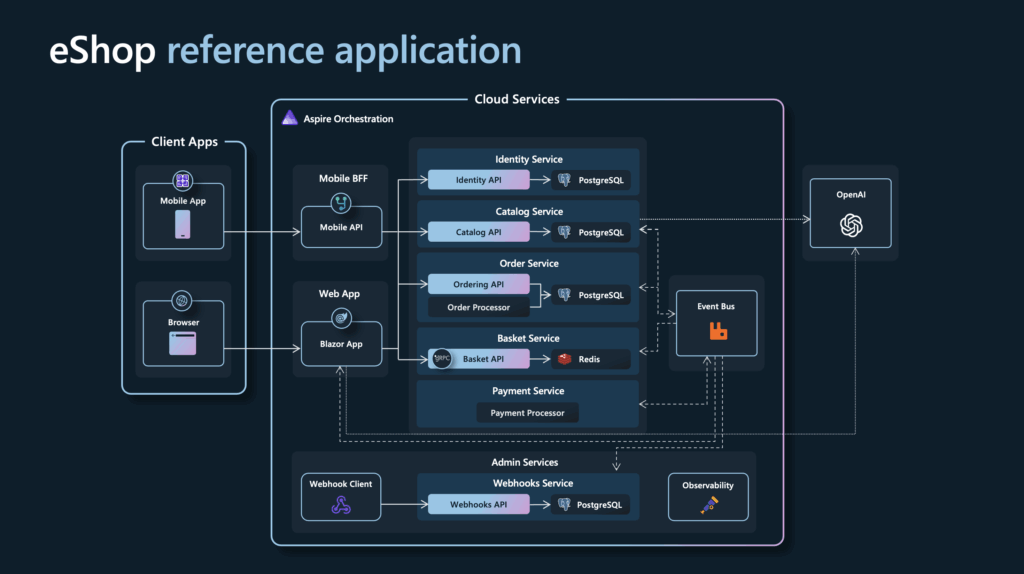

A distributed system is a collection of multiple computers (called nodes) that work together so that, from the user’s perspective, they behave like a single system. The nodes communicate over a network, using protocols such as HTTP or gRPC or going through message buses, to coordinate tasks, share data, and deliver results.

For example, when you use YouTube, one service streams video, another suggests what to watch next, and yet another manages comments. They run independently but cooperate to provide a smooth user experience. With Uber, location tracking, driver matching, and pricing calculations are handled by separate services working in tandem. This division of labor allows enormous platforms to scale and stay reliable.
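The sketch below makes "independent services cooperating" more concrete. A front end fans one page request out to three services at the same time and merges the results. The service functions (`stream_service`, `recommendation_service`, `comments_service`) and the CDN URL are hypothetical stand-ins. In a real system each would be a network call over HTTP or gRPC, not a local function.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-ins for independently deployed services.
# In a real system each of these would be a network call (HTTP, gRPC, ...).
def stream_service(video_id: str) -> dict:
    return {"stream_url": f"https://cdn.example.com/{video_id}.m3u8"}


def recommendation_service(video_id: str) -> dict:
    return {"up_next": ["v2", "v3"]}


def comments_service(video_id: str) -> dict:
    # Simulate one service being unavailable.
    raise ConnectionError("comments service unavailable")


def render_watch_page(video_id: str) -> dict:
    """Call every service concurrently and merge whatever succeeds."""
    services = {
        "stream": stream_service,
        "recommendations": recommendation_service,
        "comments": comments_service,
    }
    page = {"video_id": video_id, "errors": []}
    with ThreadPoolExecutor(max_workers=len(services)) as pool:
        futures = {name: pool.submit(fn, video_id) for name, fn in services.items()}
        for name, future in futures.items():
            try:
                # result() re-raises any exception the service call raised.
                page.update(future.result(timeout=2.0))
            except Exception as exc:
                # A failing service degrades one part of the page, not the whole page.
                page["errors"].append(f"{name}: {exc}")
    return page


print(render_watch_page("v1"))
```

In this sketch the comments service failing does not take the page down. The user still gets the video and recommendations, and the page records what was missing.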

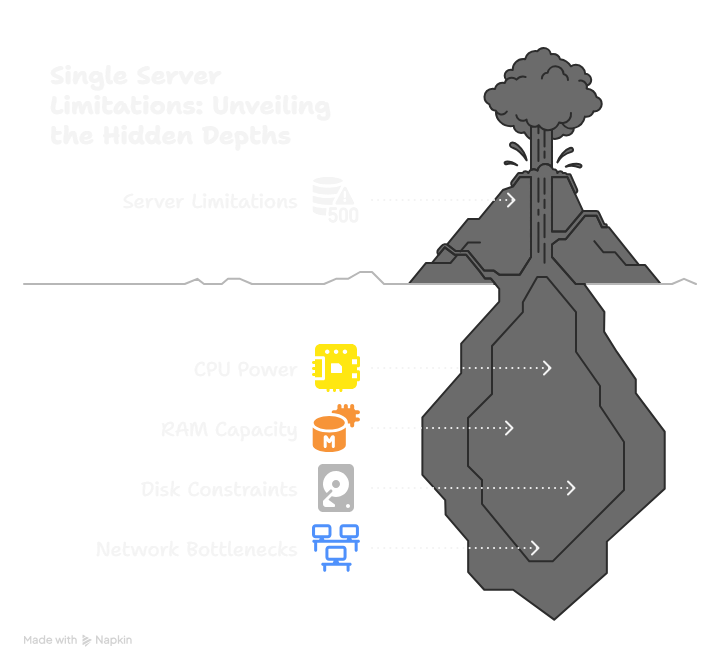

Why Not Just Use One Server?

Most applications start out simple: a single server running everything — the app, the database, all the logic. Up to a point, this is fine. But as your system’s traffic grows, just upgrading that one server with more CPU, memory, or storage — called vertical scaling — hits limits. It’s like widening a highway: after a certain width, you can’t physically add more lanes. Plus, a single server is a single point of failure — if it crashes or becomes overloaded, your entire service goes down.

Key limitations of a single server include:

- Finite CPU power (how many computations it can do)

- Limited RAM (how much data it can keep in immediate memory)

- Disk space and speed constraints

- Network throughput bottlenecks

When you hit these ceilings, performance degrades, requests time out, and the server may crash under load.

The Power of Horizontal Scaling

Distributed systems solve this by adding more servers — horizontal scaling — rather than just beefing up one machine.

Imagine that, instead of one chef with superhuman abilities in a single kitchen, you have many chefs cooking together across several kitchens. The workload is spread out, making the system more scalable, fault-tolerant, and flexible.
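Spreading requests across several machines usually relies on a load balancer placed in front of them. Here is a minimal sketch of the simplest policy, round-robin. The node names are hypothetical, and production load balancers typically also consider node health and current load.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand each incoming request to the next node in a fixed rotation."""

    def __init__(self, nodes: list[str]) -> None:
        if not nodes:
            raise ValueError("at least one node is required")
        self._nodes = cycle(nodes)  # endless iterator over the node list

    def pick(self) -> str:
        return next(self._nodes)


# Hypothetical pool of three identical app servers (horizontal scaling).
balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
assignments = [balancer.pick() for _ in range(6)]
print(assignments)  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2', 'app-3']
```

In this model, scaling out means adding another name to the node list rather than buying a bigger machine.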

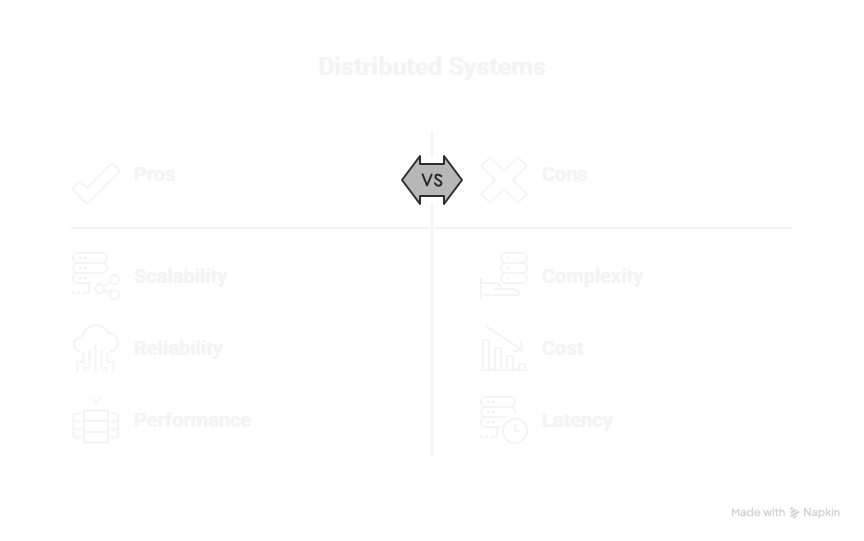

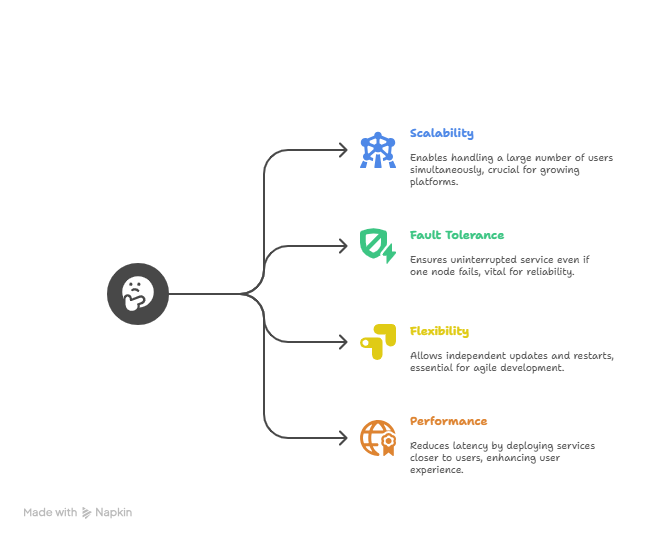

Advantages include:

- Scalability: Handle thousands or millions of users simultaneously

- Fault tolerance: If one node fails, the others can keep serving requests

- Flexibility: Update or restart parts of the system independently

- Performance: Deploy services closer to users in different regions for lower latency
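The fault-tolerance point can be shown with a small failover sketch. The caller tries replicas in turn and moves on when one fails. The `Replica` class and its `healthy` flag are hypothetical simulations of real servers. In practice the failure would surface as a timeout or connection error from the network.

```python
class Replica:
    """Simulated server; `healthy` stands in for whether the node is reachable."""

    def __init__(self, name: str, healthy: bool = True) -> None:
        self.name = name
        self.healthy = healthy

    def handle(self, request: str) -> str:
        if not self.healthy:
            raise ConnectionError(f"{self.name} is down")
        return f"{self.name} handled {request}"


def call_with_failover(replicas: list[Replica], request: str) -> str:
    """Try each replica in order; fail only if every replica fails."""
    errors = []
    for replica in replicas:
        try:
            return replica.handle(request)
        except ConnectionError as exc:
            errors.append(str(exc))  # remember why this node failed, then try the next
    raise RuntimeError("all replicas failed: " + "; ".join(errors))


replicas = [Replica("node-a", healthy=False), Replica("node-b"), Replica("node-c")]
print(call_with_failover(replicas, "GET /profile"))  # node-b handled GET /profile
```

With a single server the list has only one entry, so that one failure becomes an outage. This is the single point of failure described earlier.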

Trade-Offs and Challenges

Distributed systems aren’t a silver bullet. They introduce complexity with numerous moving parts, making debugging and deployment more challenging.

You need sophisticated tools for:

- Logging

- Tracing

- Monitoring
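Tracing, in particular, depends on every service passing along an identifier for the original request. That identifier lets log lines written on different machines be stitched back together. The sketch below rests on two assumptions. First, the `X-Request-ID` header name is a common convention chosen for illustration. Second, two in-process functions stand in for separate services. Real deployments often use the W3C Trace Context `traceparent` header through a library such as OpenTelemetry.

```python
import logging
import uuid

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("demo")

TRACE_HEADER = "X-Request-ID"  # assumed header name for this sketch


def inventory_service(headers: dict[str, str]) -> int:
    # Downstream service: reuses the caller's ID instead of inventing a new one.
    request_id = headers[TRACE_HEADER]
    log.info("[%s] inventory: checking stock", request_id)
    return 3


def order_service(headers: dict[str, str]) -> str:
    # Edge service: create an ID only if the client did not send one.
    request_id = headers.get(TRACE_HEADER) or str(uuid.uuid4())
    log.info("[%s] order: received request", request_id)
    stock = inventory_service({TRACE_HEADER: request_id})
    log.info("[%s] order: %d items in stock", request_id, stock)
    return request_id


rid = order_service({})
```

Searching the combined logs for that one ID then shows the request's path through both services.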

More powerful? Yes, but also more dangerous if not managed carefully.

In my past experience with microservices (a form of distributed system), I have seen simple problems grow into very big ones. Take authorization. In a monolithic application, authorization is a simple piece of functionality (e.g., middleware in an ASP.NET application), usually available out of the box, and that’s it. In a distributed system, it is usually a separate service that has to respond behind the reverse proxy. You need to manage that service, write tons of code, and make sure it does not become a bottleneck for your system (or a security threat). It is many times more complex than a simple built-in auth feature.
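One way to keep a separate authorization service from becoming a bottleneck is to have the gateway cache its decisions for a short time. Below is a minimal sketch of that approach. `AuthService`, the token strings, and the 30-second TTL are all hypothetical. Caching trades load for a window in which a revoked token may still be accepted.

```python
import time
from typing import Callable


class AuthService:
    """Hypothetical remote authorization service (simulated in-process)."""

    def __init__(self, valid_tokens: set[str]) -> None:
        self.valid_tokens = valid_tokens
        self.calls = 0  # counts round trips so the effect of caching is visible

    def is_authorized(self, token: str) -> bool:
        self.calls += 1
        return token in self.valid_tokens


class CachingGateway:
    """Reverse-proxy style check that caches auth decisions for `ttl` seconds."""

    def __init__(self, auth: AuthService, ttl: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.auth = auth
        self.ttl = ttl
        self.clock = clock
        self._cache: dict[str, tuple[bool, float]] = {}  # token -> (allowed, expires_at)

    def handle(self, token: str, path: str) -> str:
        now = self.clock()
        cached = self._cache.get(token)
        if cached is not None and cached[1] > now:
            allowed = cached[0]  # fresh cached decision: no call to the auth service
        else:
            allowed = self.auth.is_authorized(token)  # a network hop in a real system
            self._cache[token] = (allowed, now + self.ttl)
        return f"200 OK {path}" if allowed else "403 Forbidden"


auth = AuthService({"token-alice"})
gateway = CachingGateway(auth)
for _ in range(100):
    gateway.handle("token-alice", "/orders")
print(auth.calls)  # 1
```

Even this toy version needs decisions about expiry, revocation, and clock handling. This is part of why authorization grows so much once it leaves the monolith.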

Next Steps

Next, we will explore Microsoft’s eShop sample on .NET Aspire, Microsoft’s new stack for distributed development, and walk through installing and setting up the system.